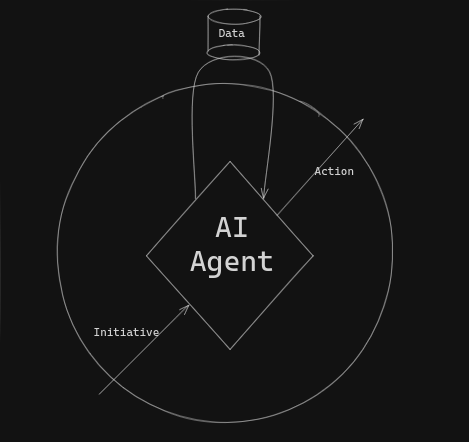

LLM autocompletion is solid at logic and reasoning. But there are some things an LLM will never be able to do in isolation: integrate real-time, real-world data, take action, or run itself. There are methods, many in use today, that solve for this. We can use RAG to insert data into the prompts we send to the LLM, giving it additional context that is essential for avoiding hallucinations and getting quality responses. We can give the LLM a list of actions and some context, ask it to choose one, then execute that action on its behalf. And we can do more than just kick off a conversation with the LLM: we can run it on a timer or trigger it from an event.

Remember these areas of AI Development as DAI - Data, Action and Initiative.

Strengthening these areas of functionality will lead to better utility, happier customers and ultimately stronger adoption of your product.

Not all applications require equal development of each, but they are all areas to consider. Let's examine how they work and when to use them.

Data for AI Applications

LLMs are trained on a tremendous amount of data, but their knowledge will always lag behind what is known in the real world. They won't know your private data, and they can get confused about what you're asking for. They're not great at arithmetic or crunching numbers, and they may confuse facts. Explicitly including data, facts, stories and context about yourself or your business is the number one way to improve the responses you get from LLMs. It is arguably more impactful than just using a better model.

There are frameworks that help build RAG applications, like LangChain, but at its simplest, you can fetch data from somewhere, such as a spreadsheet, and insert it into your prompt.

For example, if you want to include a list of your best-performing advertising creatives from the past month so you can create more like them, you could:

- Connect to the Google Ads API or Facebook Ads API (or wherever you're running ads), or even easier, download the creatives as a spreadsheet.
- Have your application load the creative text from the API or spreadsheet.
- Sort the creatives by your most important performance metric, maybe number of clicks or return on investment.
- Insert them into the prompt. I like wrapping data in XML tags, but there are different styles that work well.

Here's an example prompt for Advertising Headlines targeting Real Estate Flippers incorporating some top performing ad creatives:

You are my advertisement analyst and creative specialist, skilled at creating ad copy that excites my target market: Real Estate Flippers

Consider the following top-performing advertising creatives:

<TopPerformingAdCreatives>

You don't have to be a pro real estate flipper to have fun doing deals, guaranteed.

New, The Secret to Automatically finding great deals fast, guaranteed.

Discover the New Way to find Great Deals Fast

</TopPerformingAdCreatives>

Now, create 10 great advertising headlines like these.
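The steps above can be sketched in a few lines of Python. This is a minimal sketch, not a definitive implementation: it assumes the creatives were exported as a CSV with columns named `headline` and `clicks` (both names are assumptions), and the click counts and the last row are made-up placeholder data, not real results.

```python
import csv
import io

# Hypothetical spreadsheet export. The column names, click counts and the
# final "Placeholder" row are illustrative assumptions, not real data.
SAMPLE_CSV = """headline,clicks
Discover the New Way to find Great Deals Fast,120
"You don't have to be a pro real estate flipper to have fun doing deals, guaranteed.",340
"New, The Secret to Automatically finding great deals fast, guaranteed.",215
Placeholder low performer,12
"""


def load_creatives(csv_file):
    """Read rows from a CSV file object into (headline, clicks) tuples."""
    reader = csv.DictReader(csv_file)
    return [(row["headline"], int(row["clicks"])) for row in reader]


def top_creatives(creatives, limit=3):
    """Sort by the chosen metric (clicks here), best first, and keep the top few."""
    ranked = sorted(creatives, key=lambda item: item[1], reverse=True)
    return [headline for headline, _ in ranked[:limit]]


def build_prompt(headlines):
    """Wrap the top performers in XML-style tags and place them in the prompt."""
    creatives_block = "\n".join(headlines)
    return (
        "You are my advertisement analyst and creative specialist, skilled at "
        "creating ad copy that excites my target market: Real Estate Flippers\n\n"
        "Consider the following top-performing advertising creatives:\n\n"
        "<TopPerformingAdCreatives>\n"
        f"{creatives_block}\n"
        "</TopPerformingAdCreatives>\n\n"
        "Now, create 10 great advertising headlines like these."
    )


creatives = load_creatives(io.StringIO(SAMPLE_CSV))
PROMPT = build_prompt(top_creatives(creatives))
print(PROMPT)
```

In a real application you would swap `io.StringIO(SAMPLE_CSV)` for an opened file or an API response, and sort by whichever metric matters most to you.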

As your application creates new headlines and they are tested, automatically inserting the new top performers into this prompt ensures you're always trying out better and better headlines. But maybe you want to test these headlines automatically instead of copying them out of ChatGPT and pasting them into your advertising platform. That's where Actions come in.

Actions for AI Applications

Allow your service to do more than just respond! Maybe it can, as in the example above, automatically create some new creatives in your advertising platform with a small budget to test them. Or maybe it should submit a blog post or newsletter draft for you to review before submission. Or, just send an email to the right person for them to assess and handle the next step. Not all AI needs to be in an AI conversation!
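One way to wire this up is to keep a table of allowed actions, ask the LLM to pick one, and run only what is in the table. The sketch below assumes the model has been asked to reply with JSON of the form `{"action": ..., "arguments": {...}}`. That reply format, the handler names `send_email` and `create_draft`, and the hard-coded reply are all hypothetical stand-ins for illustration.

```python
import json


# Hypothetical handlers. Real ones would call your email or publishing service.
def send_email(to, subject):
    return f"email to {to}: {subject}"


def create_draft(title):
    return f"draft created: {title}"


# Only actions listed here can ever be executed.
ACTIONS = {"send_email": send_email, "create_draft": create_draft}


def describe_actions():
    """Build the list of action names to include in the prompt."""
    return "\n".join(f"- {name}" for name in ACTIONS)


def execute_choice(llm_reply):
    """Parse the model's JSON reply and run the matching handler."""
    choice = json.loads(llm_reply)
    name = choice.get("action")
    if name not in ACTIONS:
        raise ValueError(f"Unknown action: {name!r}")
    return ACTIONS[name](**choice.get("arguments", {}))


# Stand-in for a real model reply (assumed format, see above).
reply = '{"action": "create_draft", "arguments": {"title": "June newsletter"}}'
result = execute_choice(reply)
print(result)
```

Validating the chosen name against the table means a confused or hallucinated reply fails loudly instead of doing something unexpected.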

Many LLMs are beginning to support some form of Structured Output (read more on Structured Outputs), so your application can easily handle the response in code.

Here's some example code that runs the above prompt but returns the headlines in a form your code can handle:

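from pydantic import BaseModel
from openai import OpenAI

client = OpenAI()  # reads the OPENAI_API_KEY environment variable


# Schema the model's response must match
class HeadlinesResponse(BaseModel):
    headlines: list[str]


# PROMPT is the prompt text shown above
completion = client.beta.chat.completions.parse(
    model="gpt-4o-2024-08-06",
    messages=[
        {"role": "user", "content": PROMPT}
    ],
    response_format=HeadlinesResponse,
)

# .parsed is a HeadlinesResponse; .headlines is the list of strings
headlines = completion.choices[0].message.parsed.headlines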

Now, I can iterate over these headlines to upload them to my Ad Platform. In this way, your AI Application (or AI Agent) is actually "embodied" in its environment, enabling it to take action. But, how will it eventually evaluate the success and failure of these headlines? Do you want to go back in to run it every week or should the AI run itself? Let's examine Initiative next.
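Before moving on, here is a rough sketch of the upload loop described above. Ad platforms each have their own API, so the `uploader` callable, the `AdDraft` type and the `daily_budget` value are hypothetical placeholders, and the sample headlines are made up. In practice `uploader` would wrap a call to your ad platform's client library.

```python
from dataclasses import dataclass


@dataclass
class AdDraft:
    """Hypothetical shape of a test ad; real platforms need more fields."""
    headline: str
    daily_budget: float


def create_test_ads(headlines, uploader, daily_budget=5.0):
    """Create one small-budget test ad per non-empty headline via `uploader`."""
    created = []
    for text in headlines:
        text = text.strip()
        if not text:
            continue  # skip blank headlines rather than uploading them
        ad = AdDraft(headline=text, daily_budget=daily_budget)
        uploader(ad)
        created.append(ad)
    return created


# Stand-in uploader: collect ads in a list instead of calling a real API.
uploaded = []
ads = create_test_ads(["Find Great Deals Fast", "  ", "Flip Smarter"], uploaded.append)
print(ads)
```

Passing the uploader in as an argument keeps the loop easy to test and lets you switch platforms without touching it.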

Initiative for AI Applications

Don't be a slave to the AI! You don't need to go to ChatGPT and start talking to it to run AI every time. Computers are good at running something based on an event like seeing a news article or a sale or a form submission. They're also really good at running on a schedule like once a day or on every Monday morning or on the 3rd of every month. Let the computer establish the discipline of running the task instead of wasting your time with some recurring task on your calendar that you'll eventually ignore.

Handle incoming events, like a form submission, to begin executing the process. Or just run it once a day with a cron job or something more complex. Or, if you're no-coding it, platforms like Make, Zapier or PyroPrompts let you trigger AI automatically in various ways.
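Both kinds of trigger are small amounts of code. The sketch below is one possible shape, using only the standard library: `next_weekly_run` works out when a "every Monday morning" task should next fire, and `handle_event` starts the task when a matching event arrives. The event dictionary layout (`type`, `payload`) and the function names are assumptions for illustration.

```python
from datetime import datetime, timedelta


def next_weekly_run(now, weekday=0, hour=9):
    """Return the next datetime falling on `weekday` (0 = Monday) at `hour`:00."""
    candidate = now.replace(hour=hour, minute=0, second=0, microsecond=0)
    candidate += timedelta(days=(weekday - now.weekday()) % 7)
    if candidate <= now:
        # This week's slot has already passed, so use next week's.
        candidate += timedelta(days=7)
    return candidate


def handle_event(event, run_task):
    """Run the task for event types we care about; ignore everything else."""
    if event.get("type") == "form_submission":
        return run_task(event.get("payload", {}))
    return None


now = datetime(2024, 6, 5, 14, 30)  # a Wednesday afternoon
print(next_weekly_run(now))

# Stand-in task: a real one would build the prompt and call the LLM.
print(handle_event({"type": "form_submission", "payload": {"name": "Ada"}},
                   lambda payload: f"started for {payload['name']}"))
```

If you would rather let the operating system do the scheduling, a crontab entry such as `0 9 * * 1 python run_headlines.py` runs a script at 9:00 every Monday (the script name here is hypothetical).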

You don't expect to have to ask an employee to do something every day. You tell them once that it needs doing every day, and you expect them to do it! AI is the same!

Closing Remarks

These areas of development will evolve your AI Application or AI Agent from just an LLM Wrapper into something with differentiated value. LLM services generally aren't adding this functionality directly, but, as with OpenAI's GPTs, you can have ChatGPT integrate with a service of yours that does these things to deliver value.

DAI (Data, Action, Initiative) gives you a framework to reference when developing your applications, so you can be sure you're considering valuable areas of functionality.

Are you developing AI Applications with these areas of functionality? Let me know on LinkedIn or X or email me at matt [at] pyroprompts.com.